Cover photo: Rio Tinto-Kennecott’s Bingham Canyon copper mine in Utah, the US, has extracted more copper than any mine in history: about 18.7 million tons. CC arbyreed

I hear a notification bell. I look at my phone and see the notification is from formerly-known-as-Twitter, X: “Come join the UNESCO AI Ethics team!” Curious, I open the notification and read the job posting. They are looking for a consultant to “advance the implementation of UNESCO’s Recommendation on the Ethics of AI,” especially as it relates to “ensuring that the benefits of AI technologies are available and accessible to all, taking into consideration the specific needs of different age groups, cultural systems, different language groups, persons with disabilities, girls and women, and disadvantaged, marginalized and vulnerable people or people in vulnerable situations.”

I then read that the scope of the work is, in fact, specifically focused on gender and that–among other things–the aim is to ensure that “the potential for artificial intelligence to contribute to achieving gender equality is fully maximized.” While I read this sentence, I am amazed (but not surprised) that a job posting about “AI Ethics” implies that AI has anything to contribute to achieving gender equality. I tell myself this is precisely why we should talk about AI Power instead of AI Ethics.

AI is not inevitable, nor is it a given. Yet, there is a growing trend in the field of “AI Ethics” to maximize AI’s positive effects while mitigating its inherent risks, as if to make the most out of something that cannot be avoided. However, as Kate Crawford notes in her book Atlas of AI (2021), the very first thing to do when even considering employing AI is to look at the power infrastructure that produces it and makes its existence possible in the first place. As Crawford notes, AI is the result of extracting human life through data, raw materials, and labor. She maps out a series of specific elements that enable the design and deployment of AI, namely (1) Earth, (2) Labor, (3) Data, (4) Classification, and (5) State. To each of these, she dedicates a chapter of the book.

Environment

Crawford begins charting the Atlas by questioning the widely held belief that “data is the new oil,” a comparison typically drawn to highlight the economic value of data. Instead of following this conventional narrative, Crawford shifts the focus to the extractive nature of both technologies. She draws parallels between “data mining” and the physical extraction of oil, pointing out that both processes are inherently linked to environmental degradation, the exploitation of human labor, and significant impacts on society as a whole.

Taking San Francisco as an example of a city built on land appropriation and mining, Crawford notes how the benefits of mining have historically been enjoyed only by a small minority while the vast majority paid their costs. “Tornado, flood, earthquake and volcano combined could hardly make greater havoc, spread wider ruin and wreck than [the] gold-washing operations. . . . There are no rights which mining respects in California. It is the one supreme interest,” Crawford quotes a tourist describing California in 1869. Exploring how the mining sector exploited human labor and natural resources, the book traces parallels between the ideologies that drove the construction of San Francisco city–home to some of the richest and most powerful tech companies in the world–and the ones that drive the AI industry today.

To materially illustrate this, the book brings the reader to Silver Peak, Nevada, the only functioning lithium mine in the United States: “…a place where the stuff of AI is made”. There, batteries for phones, cars, laptops, power banks, surveillance and alarm systems become attainable. There, AI falls from its ethereal cloud of immaterial deity and becomes evidently material. People are forced to leave their land, entire ecosystems are destroyed, and workers risk their lives to extract very material, tangible resources. In Crawford’s words, “Each object in the extended network of an AI system, from network routers to batteries to data centers, is built using elements that require billions of years to form inside the earth”.

Once a smartphone has been physically produced by extracting rare minerals, Crawford notes that, according to the Consumer Technology Association, the average smartphone lifespan is a mere 4.7 years. Once digital products have been consumed and have to be discarded, they end up in e-waste dumping grounds, typically in the Global South. Once again, the people and ecosystems that benefit the least from these devices’ development and employment seem to be the ones paying the highest price for them.

Another natural resource essential to the AI industry is water. As Crawford reports, one of the biggest data centers in the US–which belongs to the National Security Agency (NSA)–has been estimated to consume 1.7 million gallons (6,435,197 liters) of water per day.

Besides water, Crawford also notes that data centers alone are estimated to consume between 1% and 2% of global energy annually, a figure that is rising alongside the expanding use of computing, which in turn increases greenhouse gas emissions (GHG).

Throughout its whole lifecycle, AI is powered by electrical energy. The electricity powering AI systems’ hardware infrastructure comes from a mix of energy sources like coal, petroleum, natural gas, and low-carbon alternatives. This mix varies by region, leading to significant differences in carbon emissions. For instance, emissions could be as low as 20g CO₂eq/kWh in Quebec, Canada, or as high as 736.6g CO₂eq/kWh in Iowa, the USA.1

Developing a sophisticated machine-learning model typically involves operating several computing devices simultaneously over extended periods, spanning days or even weeks. A study by Emma Strubell, Ananya Ganesh, and Andrew McCallum shows that a neural architecture search for training a natural language processing (NLP) model can result in emissions exceeding 626,000 pounds of CO₂-equivalent. This is approximately five times the total lifetime emissions of an average car in America. With advanced models like ChatGPT, which contain millions of parameters and require training across multiple GPUs for weeks, the carbon emissions can surge by hundreds of kilograms of CO₂ equivalent.

However, these estimates are often optimistic. As Strubell, Ganesh, and McCallum note, they do not include the true commercial scale at which Big Tech companies operate. The exact amount of energy consumed by the tech sector is an unknown corporate secret, like the amount of water consumed by the NSA-owned data center mentioned above. Just like the mineral mines are physically far from the city residents’ eyes, and the e-dumps are in remote locations (from the tech industry that drives the e-waste) where globally marginalized people reside, the energy consumption of AI is purposefully kept invisible to most of the people who enjoy its perks.

Human Labor

Another extracted resource often made invisible in the AI industry is human labor. Indeed, mineral extraction is problematic not only in terms of its environmental costs but also in terms of perpetuating poor working conditions. Amnesty International has shed light on child labor and hazardous working conditions inside mines in the Democratic Republic of Congo, emphasizing the human cost behind lithium-ion batteries.

The push for efficiency through technological advancements can lead to job displacement, intense worker surveillance, and inhumane working conditions.

Furthermore, Crawford uses Amazon as a case study to explore the impact of automation on the workforce. She illustrates how the push for efficiency through technological advancements can lead to job displacement, intense worker surveillance, and inhumane working conditions. Suddenly, the employee finds their work being dictated by the automated system rather than the system serving to support the employee’s needs.

In the context of the relationship between labor and AI, Crawford also touches upon the crowdsourced labor that trains AI. In the AI industry, crowdsourced labor refers to the tasks performed by vast numbers of people, often without direct compensation, that contribute to the “training” of AI systems. These tasks range from transcribing audio files for natural language processing models to labeling images to improve computer vision algorithms. Crowdsourced labor can also involve content moderation, which Sarah Roberts investigates in depth in her book Behind the Screen: Content Moderation in the Shadows of Social Media, showing the psychological toll on workers who filter harmful online content and highlighting PTSD risk among content moderators.

Despite its critical role in refining AI technologies, this form of labor is rarely acknowledged or compensated like traditional work. Moreover, the case of crowdsourced labor underscores a critical paradox in AI development: the reliance on human intelligence to create systems that are often celebrated for their “artificial” intelligence. This irony points to the need for a re-evaluation of the narratives surrounding AI, which–as Crawford argues–is, in fact, neither artificial nor intelligent.

Data and Classification

But what feeds an AI system? What are its decisions based upon? The simple but accurate answer would be: data.

Scholars in the field of critical data studies have long criticized the underpinnings of the notion of “raw data”, tracing the concept back to a positivist discourse of dataism. Building on poststructuralist theories questioning that “presence and unity are ontologically prior to expression,” they conceptualize data and the resulting AI systems as the material embodiment of situated knowledges.

In her book Weapons of Math Destructions (2016), Cathy O’Neil notes how data materializes “human prejudice” and reflects the knowledge, biases, and experiences of those who collect, manage, and analyze it. In fact, data does not simply exist. Instead, it is imagined, collected, and interpreted. Each of these stages requires a degree of categorization that ultimately leads to hierarchical systems of quantified information. Indeed, as noted by Durkheim and Mauss in their book Primitive Classification (1969), “every classification implies a hierarchical order”.

Crawford dedicates a whole chapter to the power dynamics that result from the classification (i.e., standardization and quantification) of human and non-human worlds. In fact, numerous scholars have been increasingly calling attention to the categorization processes at the heart of data-driven technologies. They warn that these processes reflect, produce, and reproduce a “one-world ontology” that prioritizes uniform, measurable, and generalizable knowledge systems over diverse, local, and subjective forms of understanding.

This modern “knowledge regime” can be traced back to Cartesian dualism, which distinctly separates the mind from the body and, by extension, humans from nature. Originated and prevalent in Western societies, this dominant framework to know the world is at the heart of the AI industry. As noted by Paula Ricaurte, “Data-driven rationality is supported by infrastructures of knowledge production developed by states, corporations, and research centers situated mainly in Western countries…”.

When encoded into the system, this dominant framework to know the world inevitably results in the development of a “standard” and the automated exclusion of everything that does not conform to it. As a consequence, data-driven technologies reproduce the power dynamics that organize society, thus tending to ultimately “punish the poor and the oppressed in our society, while making the rich richer.” In her book Race After Tech (2019), Ruha Benjamin addresses the increasing adoption of automated decision-making systems, contextualizing it within the history of systemic racism in the United States. More specifically, she offers a lucid analysis of how racial and gender discrimination are not only “an output of technologies gone wrong, but also . . . an input, part of the social context of design processes”, and thus the expression of a pre-existent, structurally oppressive social order.

Crawford takes affect recognition machines as an example to illustrate how data-driven systems do not fail (for instance, to recognize a Black person’s face as angry more likely than it would a white person’s) only due to a lack of representative data–which is in itself an issue that we will explore in the last section of this review. More fundamentally, they fail because the categories generating and organizing the data are socially constructed and reflective of an oppressive system that marginalizes certain groups of people.

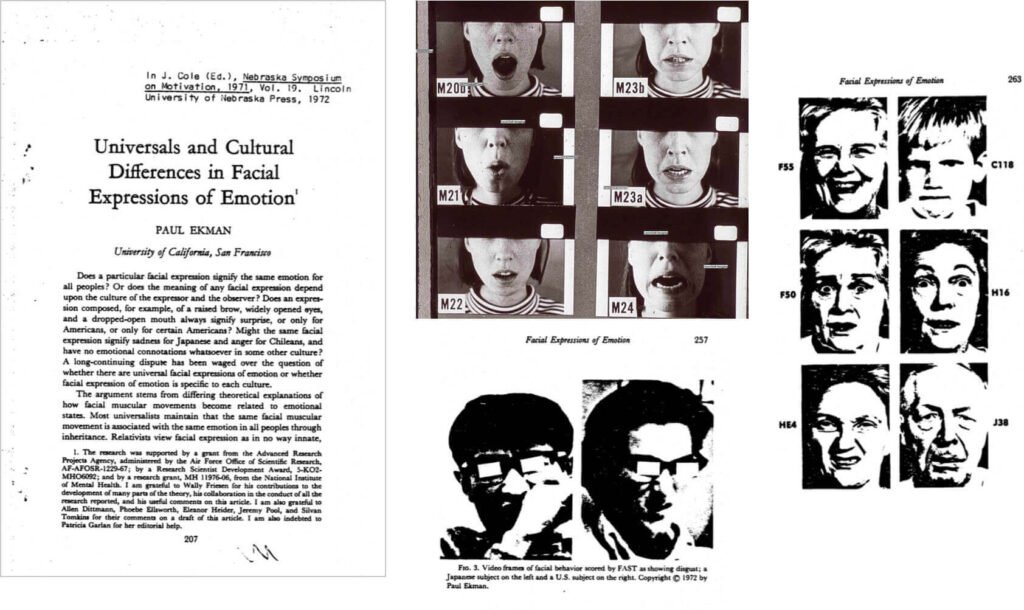

Affect recognition technologies are AI-powered systems designed to interpret human emotions through facial expressions, voice tone, and body language. The categories enabling these technologies are deeply influenced by the legacy of physiognomy, a practice based on the premise that one can discern a person’s character or emotional state from their physical appearance, particularly facial expressions. This connection to physiognomy, as highlighted by Crawford, is problematic because it embeds the technologies with the same racist biases and inaccuracies that plagued the historical practice, which has been discredited as pseudoscience. The reliance on what Paul Ekman identified as universal facial expressions for detecting emotions further complicates the efficacy of these systems. Despite Ekman’s contributions to understanding emotional expressions, the application of his findings often overlooks the nuanced and culturally specific nature of human emotions.

The assumption that algorithms can neutrally interpret emotions from facial expressions ignores the subjective and socially constructed nature of data categories used to imagine, develop, and employ these systems. Under the guise of objectivity and the naturalization of a one-world ontology, these technologies reinforce stereotypes and perpetuate marginalization, erasing worlds that do not conform to “the standard” and depriving people of vital services and opportunities. When applied to AI, this translates in the automated marginalization and oppression of entire ecosystems and social groups of people.

To counter such epistemic dominance, decolonial thinkers and activists have long been arguing for post-Cartesian frameworks that focus on epistemic disobedience, plurality, and relationality. These accounts have become increasingly recognized for understanding and resisting the rapidly spreading AI industry, whose functioning is entirely based on the automation of categorization.

Among them, Arturo Escobar argues for a “pluriversal” approach to design and technology: to acknowledge and incorporate diverse knowledge systems and world views, allowing a relational interaction with human and non-human worlds. In a similar vein, Marisol de la Cadena–also an anthropologist–provides ethnographic accounts that challenge the dominant Western narratives and scientific classifications. In Earth Beings: Ecologies of Practice Across Andean Worlds (2015), she highlights how indigenous knowledge systems often encompass a more integrated view of nature and culture and recognize non-human entities’ agency and relational existence in ways that Cartesian dualism and Western scientific categories typically do not.

Ideologies and Beliefs

As a common thread throughout the Atlas she charts, Crawford identifies a specific set of ideologies and beliefs that shape and inform the AI industry. In particular, in the book’s epilogue, she explores the contemporary ambitions for space colonization. Here, Crawford argues that these ambitions are deeply rooted in the same ideologies that drive the development and deployment of AI on Earth: colonial ideologies centered on domination, expansion, and exploitation.

On the developmental side, AI systems are often designed and trained to categorize, predict, and control, mirroring colonial practices of mapping, managing, and exploiting. They are then assembled by extracting natural resources, data, and labor. Finally, these systems are deployed in ways that often reinforce existing power structures and are used to surveil and control populations in ways that echo colonial governance tactics.

Although Crawford does not seem eager to use the parallelism of colonialism to explain current AI practices, throughout the book, she does, in fact, show how this is the dominant ideology shaping the AI industry.

First, the extraction and exploitation of rare minerals and natural resources are driven by capitalist imperatives for accumulation and profit maximization, which are a direct continuation of a history of colonialism.

Second, the AI industry extracts and exploits human labor, guided by the ideology that values efficiency and productivity over workers’ well-being and fair treatment. Here, Crawford traces the widespread practice of AI-powered worker surveillance back to the Industrial Revolution, which demanded controlling workers for “greater synchronization of work and stricter time disciplines” to appease the growing capitalism-driven culture that praised “early rising and working diligently for as long as possible.” Another genealogy traced in the book is one of colonial slave plantations, where “oversight meant ordering the work of slaves within a system of extreme violence.” Similarly to both lineages, the AI industry–by designing and producing technologies aimed at surveilling–and the embedment of AI in work environments–by surveilling workers and auto-dictating their work–reflect and reproduce capitalist and colonial id.entry-content img, .entry-content figure {display: block!important; align-self: center!important; align: center;}eologies of control, order, and exploitation.

Third, as far as data is concerned, AI technologies facilitate the extraction and processing of vast amounts of data from individuals and communities, often without their consent or equitable benefit sharing. These are modern digital equivalents of resource extraction. The AI industry sees Internet content as theirs for the taking. As noted by Ulises Mejias and Nick Couldry in their newly published book, The Data Grab (2024), AI corporations treat data about human life as “just there” to be taken for their profit. This data extraction is critical for training AI systems, which, in turn, are used to optimize the extraction process further, creating a cycle that mirrors the feedback loops of colonial accumulation.

Fourth, the story Crawford tells about Samuel Morton, an American craniologist who classified and ranked human races by examining skulls, clearly shows how the categorization processes integral to modern data-driven technologies are deeply rooted in an ideology of colonialism, racism, and Western-centric epistemology.

While the colonial ideology at the heart of the AI industry is exemplified by extraction, exploitation, and categorization practices, it is also mirrored by state power. Crawford sheds light on the alignment of AI systems with the states’ surveillance, control, and militarization objectives, illustrating the pivotal role of state ideologies in the deployment of AI.

Governments worldwide are investing heavily in AI for purposes ranging from public administration and service delivery to national security and defense. This deployment is driven by a belief in AI’s potential to enhance state efficiency, maintain order, and ensure the state’s competitive edge on the global stage. On the one hand, it is important to critically assess the use of AI for public service delivery for the reasons illustrated earlier, which include the potential for systemic encoded biases and inequities embedded within AI systems to exacerbate existing social divides. When governments deploy AI technologies for purposes like welfare distribution, healthcare, and education, the risk is not merely in the efficacy of these systems but in their inherent (and inevitable) biases that reproduce the “matrix of domination.”

Refusal

While AI is frequently utilized for profit and commercial gains, an increasing number of public institutions are promoting the use of automated systems for “the public good.” Framing datafication as a process for public purposes is usually regarded as much more meritorious than doing the same for private interests.

However, datafication processes for the public sector present a series of widely recognized issues mainly revolving around concerns about privacy and consent, bias, and digital access and literacy. The solutions to these problems are generally related to regulation and ethical principles, transparency, and more digital education and infrastructure, respectively. To address these in an internationally concerted way, a growing number of responsible data governance frameworks are emerging at national, international, and global levels, highlighted by initiatives such as the UN Resolution on AI adopted in March 2024.

A public policy strategy usually seen as an example of “responsible” use of digital systems for public purposes gives people the choice to share or not share the data about them. For instance, the City of Barcelona advocates for citizens’ data control and ownership, thus characterizing data-sharing processes as voluntary and giving everyone a chance not to engage.

However, it seems worth considering the power structures at play within such a paradigm. More specifically, it seems reasonable to question whether all people inhabiting the city (of whom only a portion are citizens) do, in fact, have the same capability to exercise the rights to data ownership offered by the city. Indeed, in an urban environment where data is voluntarily shared, it seems inevitable that the resulting dataset–which would inform the delivery of public services–will turn out skewed and partial. Moreover, it is crucial to address the fact that not everyone can even produce the data necessary to be taken into account and represented in the datafication of the urban space. Indeed, it seems fundamental to address the question of the poor, the not-digitally-adept, those who live with disabilities, and many other members of society who might want to but do not have the means to turn themselves into a series of data points.

Although a certain degree of freedom of choice seems to empower those categorized as citizens (which is a very specific, restricted group of people), it is important to note how it also entails the risk of basing city governance on a remarkably non-representative urban dataset. Consequently, city-level decisions might be taken according to the needs of those who can and choose to share their data, ultimately penalizing those who do not. Clearly, this dynamic involves crucial ethical questions, particularly about the right to refuse and resist the datafication model. Are those truly viable options for marginalized individuals and communities?

Refusal to engage with certain technologies presents a form of resistance against invasive data practices, particularly among communities who feel disproportionately targeted or disadvantaged by these systems.

Building on the challenges identified, refusal to engage with certain technologies presents a form of resistance against invasive data practices, particularly among communities who feel disproportionately targeted or disadvantaged by these systems. For example, individuals in communities interviewed by and involved with the Our Data Bodies project have developed strategies to mitigate the impact of surveillance. Some have opted to limit their digital footprint by avoiding unnecessary digital interactions and services known for extensive data mining. Others engage in deliberate acts of data obfuscation, such as providing misleading or incorrect information to confuse data aggregation algorithms and protect personal privacy. In The Data Grab, Mejias and Couldry also identify a series of social groups that are exercising refusal in different ways, including citizens pressuring politicians to enforce bans on AI-based facial recognition systems, workers unionizing against inhumane working conditions, activists organizing against AI-based human rights violations, and indigenous groups identifying ways to repurpose and re-appropriate technology for their own social justice battles.

Despite the value and necessity of individual acts of refusal, there remains a pressing need for more systemic solutions that challenge the matrix of modern colonial power that characterizes the AI industry and its governance as a whole. This order, deeply dependent on the datafication, standardization, and classification of human and non-human worlds, calls for an urgent reevaluation of the frameworks and policies that govern and underpin its workings.

To do so, it seems necessary to understand the various actors and ideologies that enable all of it. In Atlas of AI, Crawford begins to do precisely this: by tracing the origins and impacts of AI from the extraction of raw materials to the deployment of algorithms, she unpacks the structures and mechanisms through which AI is produced and employed at the global level, revealing the economic, environmental, and social costs embedded in these processes.

To deepen and broaden this understanding, as well as to imagine alternative futures, it may prove beneficial to integrate insights from the adjacent fields of political ecology, indigenous knowledge theories, and decolonial studies, as well as from resistance practices that challenge dominant narratives and power structures. These offer long-standing critical perspectives that may help us contest the dominant paradigms of AI-based knowledge production centered in the Global North, valorizing and integrating the diverse epistemologies that are marginalized and erased by modern data practices.

Endnotes

- The amount of carbon produced by computing activities is linked to both the amount and type of electricity consumed, quantified in terms of grams of CO₂-equivalent (CO₂eq) per kilowatt-hour. CO₂eq serves as a universal metric for comparing the global warming impact of different greenhouse gasses. ↩︎

Sara Marcucci

Sara is a researcher working at the intersection of technology, policy, and social justice. She is currently researching the ecocultural implications of the AI industry and the right to refuse it and imagine radical alternatives.